Reliable Test Case Generation with AI

Join this two-hour live workshop. I show you how to guide Claude Code, Codex CLI, Gemini CLI, and other LLM-powered tools to write sharp Ruby tests using proven test design techniques.

Led by Lucian Ghinda

I have spent fifteen years with Ruby. I build, teach, and mentor. I keep testing sharp so code and teams move faster.

Objectives

What are the objectives of this workshop?

Many developers use AI tools like ChatGPT, Cursor, Gemini, or Claude to generate tests. The output is often wrong or shallow, or it skips important parts of the code. This workshop will help you answer:

Without clear prompts, LLMs guess based on loose patterns instead of sound testing principles. This workshop will teach you how to move beyond luck with test design techniques that sharpen your prompts and the tests they produce.

Intended audience

This workshop is for developers who use or plan to use AI tools for test generation.

- The main audience is Ruby developers, Rails engineers, and QA engineers who want better results from AI tools.

- Builders who already use ChatGPT, Claude, Cursor, or other LLMs and want steady output.

- People who are curious about AI-assisted testing but doubt the quality and want proof.

- Developers who want faster testing without losing quality and need prompts that deliver.

- Team leads or engineering managers who want a repeatable AI testing practice for the team.

- Anyone who is curious, skeptical, or already using LLMs and wants sharper Ruby and Rails tests.

Learn

What do you learn?

You will learn how to guide LLMs on Ruby and Rails projects to:

Prompt LLMs with clear guidance, not vague "write tests" prompts.

Apply test design techniques so your prompts produce steady results.

Generate thorough test cases that cover edge cases and business rules.

Compare outputs across LLMs and see how clear prompts improve consistency.

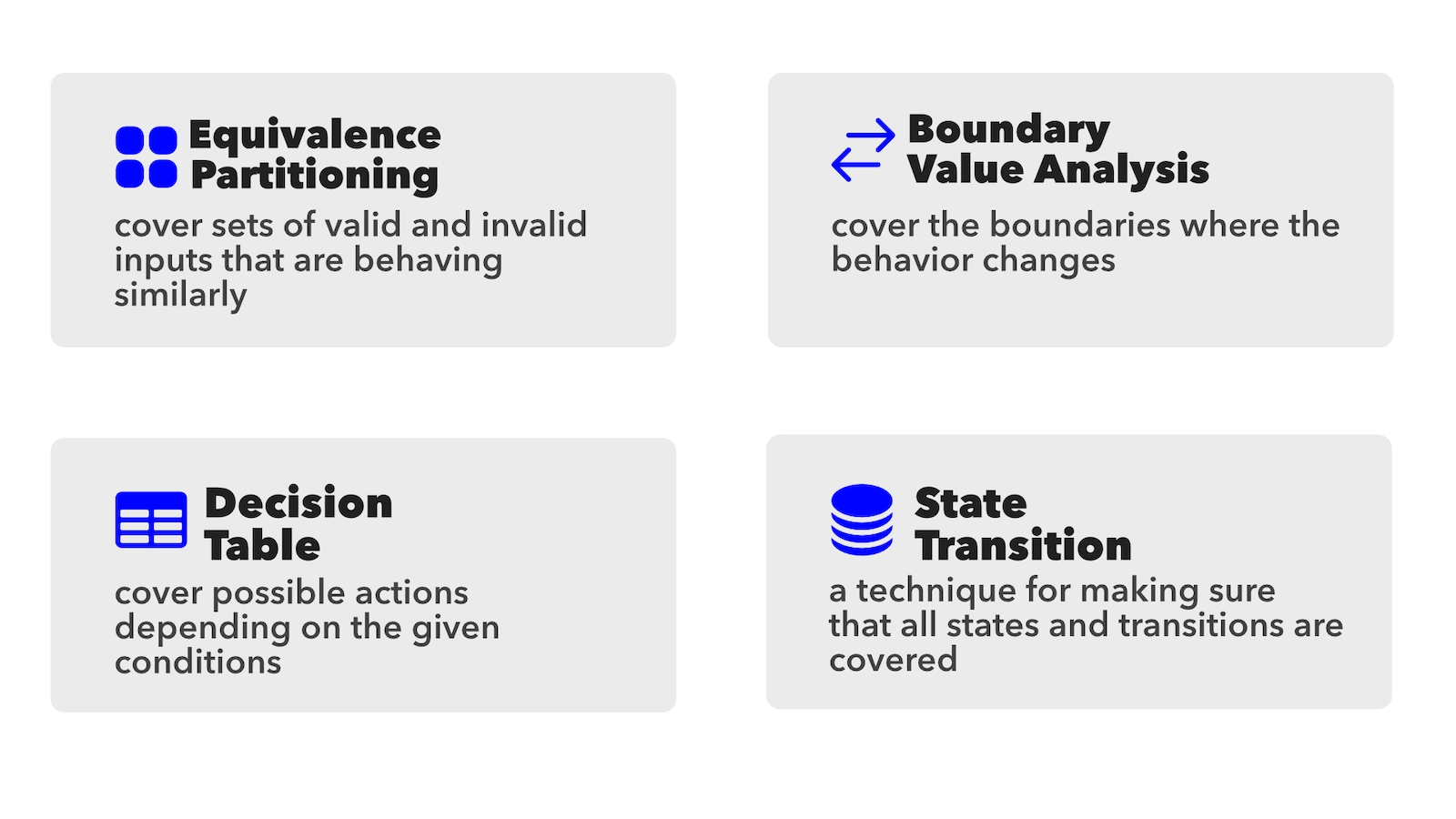

You will apply these test design techniques in your AI prompts:

- Equivalence Partitioning: help LLMs group similar inputs into partitions and write one representative test for each partition.

- Boundary Value Analysis: ask AI tools to probe the edges between partitions, where bugs tend to hide.

- Decision Tables: structure prompts so LLMs cover every combination of boolean conditions in your Ruby code.

- State Transition Testing: guide AI tools to check each state change in your Rails apps.
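To make the Decision Tables idea concrete, here is a minimal sketch in Ruby with Minitest. The `Shipping.free?` rule and its three boolean conditions are hypothetical, invented only for illustration. The point is the structure: a decision table lists every combination of conditions (2 × 2 × 2 = 8 rows here) next to its expected outcome, which is the kind of table you can ask an LLM to fill in before it writes any tests.

```ruby
require "minitest/autorun"

# Hypothetical business rule, for illustration only:
# shipping is free for members or with a promo code, never for oversized items.
module Shipping
  def self.free?(member:, promo:, oversized:)
    return false if oversized

    member || promo
  end
end

class ShippingDecisionTableTest < Minitest::Test
  # Decision table: one row per combination of the three conditions.
  RULES = [
    # member, promo, oversized, expected
    [true,  true,  true,  false],
    [true,  true,  false, true],
    [true,  false, true,  false],
    [true,  false, false, true],
    [false, true,  true,  false],
    [false, true,  false, true],
    [false, false, true,  false],
    [false, false, false, false]
  ].freeze

  # Generate one test per row of the table.
  RULES.each_with_index do |(member, promo, oversized, expected), i|
    define_method("test_rule_#{i + 1}") do
      assert_equal expected,
                   Shipping.free?(member: member, promo: promo, oversized: oversized)
    end
  end

  # Sanity check: the table contains all 8 distinct condition combinations.
  def test_table_is_complete
    assert_equal 8, RULES.map { |row| row.first(3) }.uniq.size
  end
end
```

Keeping the table as data makes a missing or duplicated combination easy to spot, both for you and for an LLM reviewing its own output.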

You will see real examples from Ruby and Rails code, compare outputs across LLMs, and leave with practical steps to make AI-assisted testing systematic and steady.
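As a rough illustration of State Transition Testing from the list above, the sketch below uses a hypothetical `Order` class whose states and events live in a plain Ruby hash (not a Rails model or a state-machine gem). It generates one test per valid transition, so every edge of the state diagram is exercised once, and adds checks that a couple of invalid transitions are rejected.

```ruby
require "minitest/autorun"

# Hypothetical order lifecycle, for illustration only:
# pending -> paid -> shipped, and pending or paid -> cancelled.
class Order
  TRANSITIONS = {
    pending: { pay: :paid, cancel: :cancelled },
    paid: { ship: :shipped, cancel: :cancelled },
    shipped: {},
    cancelled: {}
  }.freeze

  attr_reader :state

  def initialize
    @state = :pending
  end

  # Moves to the next state, or raises if the event is not allowed here.
  def fire(event)
    next_state = TRANSITIONS.fetch(@state)[event]
    raise ArgumentError, "cannot #{event} an order that is #{@state}" unless next_state

    @state = next_state
  end
end

class OrderStateTransitionTest < Minitest::Test
  # Valid events that bring a new order into each starting state.
  SETUP_EVENTS = { pending: [], paid: [:pay] }.freeze

  # One test per valid transition (each edge of the state diagram).
  Order::TRANSITIONS.each do |from, events|
    events.each do |event, to|
      define_method("test_#{from}_#{event}_moves_to_#{to}") do
        order = Order.new
        SETUP_EVENTS.fetch(from).each { |e| order.fire(e) }
        order.fire(event)
        assert_equal to, order.state
      end
    end
  end

  # Invalid transitions must be rejected.
  def test_pending_order_cannot_ship
    assert_raises(ArgumentError) { Order.new.fire(:ship) }
  end

  def test_cancelled_order_cannot_be_paid
    order = Order.new
    order.fire(:cancel)
    assert_raises(ArgumentError) { order.fire(:pay) }
  end
end
```

Deriving the tests from the transition table means a new state or event automatically gets a test, which is a useful property to ask an LLM to preserve.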

Key Takeaways

What you'll walk away with

- Stronger prompts for LLMs such as ChatGPT, Claude, or DeepSeek so they generate meaningful Ruby and Rails tests.

- Clarity on why generic AI-generated tests fail and how test design techniques fix that.

- Four core test design techniques (Boundary Value Analysis, Equivalence Partitioning, Decision Tables, and State Transition Testing) and how to build them into your prompts.

- Real Ruby and Rails examples with side-by-side LLM comparisons.

- A repeatable process you can put to work right away.

Register

Join the next live session

No Upcoming Sessions Scheduled

We're planning the next cohort of Reliable Test Case Generation with AI. Subscribe to get notified when new dates are published.

If the time does not work for you, fill out this short survey so I can plan future sessions that work better for you.

You'll hear about fresh dates, discounts, and new AI testing resources.

Testimonials

What experts are saying about the workshop

"At the moment, LLMs might go unchecked and suggest tests that are either redundant or that miss important corner cases. During the workshop, Lucian introduced useful techniques to help developers and AI reason about our tests and the problem space."

Instructor

Why learn with me?

I have worked with Ruby since 2006 and I am a certified ISTQB Trainer. Since 2013 I have led testing workshops and training sessions that help developers add structure without slowing their work.

I test ideas in real projects to see where LLMs add value, where they fall short, and how to steer them in Ruby and Rails environments where speed matters.

This workshop reflects my daily work with teams who want useful LLM-generated tests. You get practical techniques that improve your workflow, without the hype.

Newsletter

Subscribe

Subscribe to get access to free content and be notified when the next workshop is scheduled.

Workshops usually fill up, so it is best to register as early as possible.

FAQ

Frequently asked questions

Will this workshop be recorded?

Yes. The workshop is recorded, and the recording is available for download to participants. You can access it in the participants' area on this website using the email address you registered with.

What programming language do I need to know to attend the workshop?

You need to know Ruby at least at a junior level. This training uses examples from open-source Ruby on Rails repositories, so familiarity with Ruby syntax and basic Rails concepts is essential.

For example, you should be comfortable reading and understanding code like this, where the valid? method returns true or false based on the account age:

```ruby
class Validator
  def initialize(account)
    @account = account
  end

  def valid?
    return false if @account.age < 18

    true
  end
end
```

If you can comfortably read and understand Ruby code at this level, you'll be able to follow all the workshop examples and exercises.
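As a rough sketch of what Equivalence Partitioning and Boundary Value Analysis produce for the `Validator` above: the `age < 18` check splits ages into two partitions (under 18, and 18 or older), and the boundary lies between 17 and 18. The tests below assume Minitest and a hypothetical `Account` struct with only an `age` attribute; they illustrate the techniques and are not taken from the workshop material.

```ruby
require "minitest/autorun"

# Copy of the Validator class from the example above.
class Validator
  def initialize(account)
    @account = account
  end

  def valid?
    return false if @account.age < 18

    true
  end
end

# Hypothetical stand-in for an account; only #age is needed here.
Account = Struct.new(:age)

class ValidatorTest < Minitest::Test
  # Equivalence Partitioning: one representative value per partition.
  def test_minor_partition_is_invalid
    refute Validator.new(Account.new(10)).valid?
  end

  def test_adult_partition_is_valid
    assert Validator.new(Account.new(40)).valid?
  end

  # Boundary Value Analysis: the values on each side of the 18 boundary.
  def test_age_17_is_invalid
    refute Validator.new(Account.new(17)).valid?
  end

  def test_age_18_is_valid
    assert Validator.new(Account.new(18)).valid?
  end
end
```

An off-by-one mistake such as `<= 18` would still pass the two partition tests but fail `test_age_18_is_valid`, which is why the boundary values matter.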

Are there any prerequisites or software needed?

We will not run code during the workshop, because we focus on understanding testing fundamentals and how to write effective tests.

What matters more is having Zoom installed and your microphone, audio, and video settings working.

Is prior testing experience required?

No prior testing experience is required. This workshop teaches you how to design test cases and how to cover requirements or code with efficient and effective tests.

Will this workshop teach me TDD?

Test-Driven Development is a development process where you write tests before writing the actual code. This workshop does not focus on TDD. Instead, we focus on test design: identifying test conditions, covering business logic or code with tests, and learning how to reduce the number of tests while keeping coverage high.

How long is the workshop, and what is the schedule?

The workshop is 3 hours long, with a 15-minute break roughly in the middle. Each session's start time is listed on the event page.